Human-Robot Trust

When we meet someone for the very first time, we can intuitively answer the question “How much can I trust this person?” Trust is a complex social-emotional concept, yet this inference forms quickly and with an accuracy that is better than chance. In situations where a person’s past behavior or reputation is unknown, we rely on other sources of information to infer that person’s motivations. Nonverbal behaviors are one such source of information about underlying intentions, goals, and values. Much of our communication is channeled through facial expressions, body gestures, gaze direction, and many other nonverbal behaviors. Our ability to express and recognize these nonverbal expressions is at the core of social intelligence.

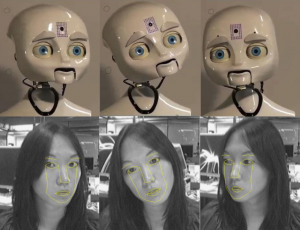

But which nonverbal behaviors signal the trustworthiness of a person we have never met? The psychology department at Northeastern University identified a candidate set of nonverbal cues (face touching, arms crossed, leaning backward, and hand touching) that was hypothesized to be indicative of untrustworthy behavior. To confirm and further validate such findings, a common practice in social psychology is to employ a human actor to perform certain nonverbal cues and study their effects in a human-subjects experiment. A fundamental challenge inherent in this research design is that people regularly emit cues outside of their own awareness, which makes it difficult even for trained professional actors to express specific cues reliably. Our strategy for meeting this challenge was to employ a social robotics platform. By using a humanoid robot, we took advantage of its programmable behavior to control exactly which cues are emitted to each participant (see example video above). In collaboration with the Social Emotions Lab at NEU, Johnson Graduate School of Management, and Cornell University, we found through a human-subjects experiment that the robot’s expression of the hypothesized nonverbal cues resulted in participants perceiving the robot as a less trustworthy agent.

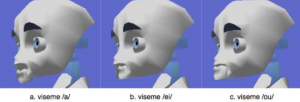

We developed a system that allowed the psychologists to remotely control our complex humanoid robot, which has 38 skeletal joints. Our goal was to enable them to have a natural conversation with another person through this robotic medium. The primary technical challenge was to give non-expert users expressive but precisely timed control of the robot’s motions. By combining fully autonomous behaviors with semi-autonomous interfaces, we developed a tele-robotic interface to control the robot’s face and body motions for a human-subjects experiment.
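The idea of layering operator-triggered cues over autonomous background behavior can be illustrated with a small sketch. This is not the system used in the study; the class names, the cue names, and the `idle_breathing` default are all hypothetical, and the sketch assumes that only one cue occupies the robot at a time and that time queries arrive in non-decreasing order.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class CueCommand:
    """A single operator-triggered cue (hypothetical structure)."""
    start_time: float                       # seconds from session start
    name: str = field(compare=False)        # e.g. "face_touch"
    duration: float = field(compare=False)  # seconds the cue lasts


class CueScheduler:
    """Queues timed cues and falls back to an autonomous idle behavior."""

    def __init__(self):
        self._queue = []  # min-heap ordered by start_time

    def trigger(self, name, start_time, duration):
        """Schedule a cue to begin at start_time and last for duration."""
        heapq.heappush(self._queue, CueCommand(start_time, name, duration))

    def active_behavior(self, t, idle="idle_breathing"):
        """Return the behavior at time t.

        Assumes t never decreases between calls, because finished
        cues are discarded as they are passed.
        """
        # Drop cues whose time window has already ended.
        while self._queue and (
            self._queue[0].start_time + self._queue[0].duration <= t
        ):
            heapq.heappop(self._queue)
        # The earliest remaining cue is active if it has started.
        if self._queue and self._queue[0].start_time <= t:
            return self._queue[0].name
        # Otherwise the autonomous background behavior runs.
        return idle


scheduler = CueScheduler()
scheduler.trigger("face_touch", start_time=2.0, duration=1.5)
scheduler.trigger("arms_crossed", start_time=5.0, duration=2.0)
timeline = [scheduler.active_behavior(t) for t in (0.0, 2.5, 4.0, 5.5, 7.0)]
```

In a design like this, the operator only decides which cue to emit and when, while the fallback behavior keeps the robot looking alive between cues, which is one way to give non-expert users precise timing without joint-level control.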

Noteworthy

- New York Times Blog: Who’s Trustworthy? A Robot Can Help Teach Us.
- Engadget: Nexi robot helps NEU track effects of shifty body language
- Boston Globe: Robots may Furnish Lesson in Human Trust
- NSF News: Trusty Robot Helps Us Understand Human Social Cues
- Psychological Science News: Who (and What) Can You Trust? How Non-Verbal Cues Can Predict a Person’s (and a Robot’s) Trustworthiness